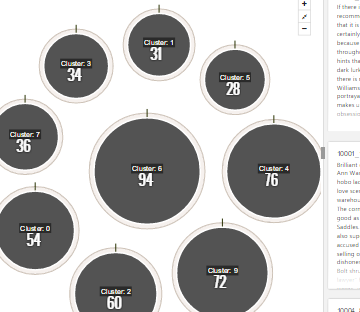

Clustering is one method to make sense of unstructured text (e.g.: comments, product reviews, etc.). Clustering is an unsupervised machine learning method where the end result is not known in advance. In this particular example, clustering groups similar text together and speeds the rate at which it can be reviewed.

In a future post I’ll cover stemming and why it’s needed.

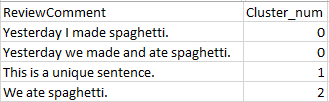

The sample code below produces a .CSV output file like the image below.

This .CSV can be imported into your visualization tool of choice or merged with other data.

The overall process for the sample code below is

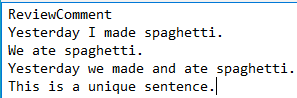

All that’s needed is an input text file that looks like the one below.

In the sample code below this file is referred to as: input_file = ‘.\TextInput.txt’. The code treats this file as a .CSV file so remove all commas from the file before running.

The “ReviewComment” column header is needed in the input_file in case there are multiple columns, it simply looks for this column and ignores the rest.

K-Means clustering is the clustering method used below. K-Means chooses a random centroid each time it runs, therefore it could assign the input data to different clusters when re-run. It works best on data than is spherical and doesn’t overlap. Also, it needs the number of clusters to be specified in advance (see the elbow method for details). It’s best to determine the number of clusters separately using the elbow method then update the “num_clusters” variable in the code below to match the elbow you choose.

All code below is for illustrative purposes only and is unsupported.

# —

# Libs

import re

from nltk.corpus import stopwords

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.cluster import KMeans

import pandas as pd

# —

# Globals

input_file = ‘.\TextInput.txt’

# Manually specify the number of clusters after the elbow method has been used separately.

num_clusters = 3

# —

# Funcs

def cleanString(incomingString):

# Purpose: Cleans the input string.

# Add or remove statements below as necessary depending on how much cleaning

# needs to be done.

newstring = incomingString

newstring = re.sub(‘<[^>]*>’, ‘ ‘, newstring)

newstring = newstring.lower()

newstring = newstring.encode(‘ascii’, ‘ignore’).decode(‘latin-1’)

#newstring = newstring.replace(“!”,””)

newstring = newstring.replace(“@”,””)

newstring = newstring.replace(“#”,””)

newstring = newstring.replace(“$”,””)

#newstring = newstring.replace(“%”,””)

newstring = newstring.replace(“^”,””)

newstring = newstring.replace(“&”,”and”)

#newstring = newstring.replace(“*”,””)

#newstring = newstring.replace(“(“,””)

#newstring = newstring.replace(“)”,””)

#newstring = newstring.replace(“+”,””)

#newstring = newstring.replace(“=”,””)

#newstring = newstring.replace(“?”,””)

newstring = newstring.replace(“\'”,””)

newstring = newstring.replace(“\””,””)

#newstring = newstring.replace(“{“,””)

#newstring = newstring.replace(“}”,””)

#newstring = newstring.replace(“[“,””)

#newstring = newstring.replace(“]”,””)

newstring = newstring.replace(“<“,””)

newstring = newstring.replace(“>”,””)

newstring = newstring.replace(“~”,””)

newstring = newstring.replace(“`”,””)

#newstring = newstring.replace(“:”,””)

#newstring = newstring.replace(“;”,””)

newstring = newstring.replace(“|”,””)

newstring = newstring.replace(“\\”,””)

newstring = newstring.replace(“/”,””)

#newstring = newstring.replace(“-“,””)

newstring = newstring.replace(“_”,””)

#newstring = newstring.replace(“br”,””)

#newstring = newstring.replace(“,”,””)

#newstring = newstring.replace(“.”,””)

return newstring

def createStopWords():

# Purpose: Use the stop words provided by Python and add additional stop words as needed.

stopWords = stopwords.words(‘english’)

moreStopWords = [‘didnt’, ‘doesnt’, ‘dont’, ‘got’, ‘im’, ‘ive’, ‘wont’]

stopWords = stopWords + moreStopWords

return stopWords

# —————

# Main

stopWords = []

stopWords = createStopWords()

#Read local data into a data frame (DF).

inputDF = pd.read_csv(input_file, low_memory=False, encoding = “ISO-8859-1”)

# Clean input text then put it back in the same DF under a different column name.

inputDF[‘ReviewCommentCleaned’] = inputDF[‘ReviewComment’].apply(cleanString)

vectorizer = TfidfVectorizer(stop_words=’english’)

tfidf_matrix = vectorizer.fit_transform(inputDF[‘ReviewCommentCleaned’].tolist())

vector_dict = {}

vector_dict = vectorizer.vocabulary_

#print(“vector_dict:\n”, vector_dict)

# This is really the header for the tfidf_matrix

print(“\ntfidf_matrix term header and values:\n”)

terms = vectorizer.get_feature_names()

print (terms)

# Shows TF IDF results for each term.

print(tfidf_matrix.toarray())

print (‘\nNum_clusters to be used:’, num_clusters)

km = KMeans(n_clusters=num_clusters, init=’k-means++’, n_init=1, max_iter=100)

km.fit(tfidf_matrix)

# Add the cluster number to each comment back to the original DF.

clusters = km.labels_.tolist()

inputDF[‘Cluster_num’] = clusters

#inputDF = inputDF.sort_values([‘Cluster_num’])

print(“\nFile_inputDF:\n”, inputDF)

# Write the results to a CSV.

output_extension = []

output_extension = “_Cluster2.csv”

output_file = input_file + output_extension

print(“\nWrote results to the output file: “, output_file)

inputDF.to_csv(output_file, sep=’,’, encoding=’utf-8′)

Extra resources:

Here is the full list of scikit-learn clustering algorithms.

Here is a visual representation of K-Means clustering in action.